The Dataset

This multi-sensory tactile dataset captures more of human touch than pressure alone. It records the haptic, audio, and visual signals produced while people explore textured surfaces.

What We Capture

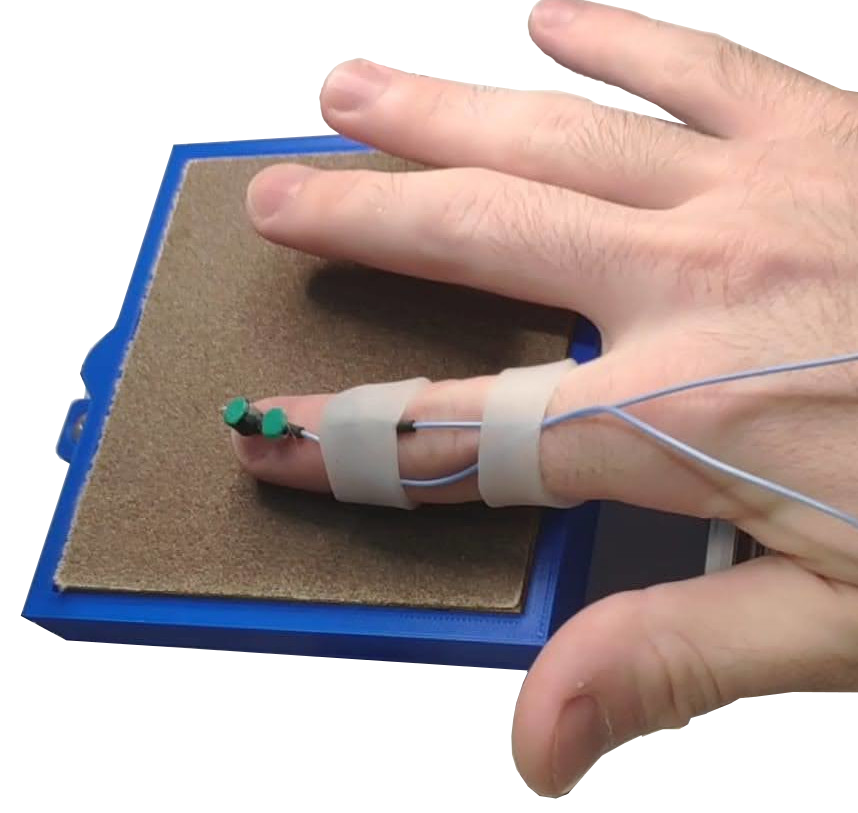

Haptic Signals

- Friction-induced vibrations: sensed by accelerometers
- Applied load: force/torque measurements
- Fingertip position: precise tracking
- Exploration speed: velocity data
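Speed and position are listed as separate signals. If speed ever has to be recomputed from the fingertip trajectory (for example, after trimming or resampling a trace), a central-difference estimate is one simple option. This is a minimal sketch that assumes a hypothetical layout, not the dataset's documented format: a list of strictly increasing timestamps in seconds and matching (x, y, z) positions in metres. The function name `exploration_speed` is illustrative.

```python
import math

def exploration_speed(t, pos):
    """Estimate fingertip speed in m/s from timestamped 3-D positions.

    Hypothetical layout (not the dataset's documented format): t is a list of
    N strictly increasing timestamps in seconds, pos is a list of N (x, y, z)
    tuples in metres.
    """
    n = len(t)
    if n < 2 or len(pos) != n:
        raise ValueError("need at least two samples and one position per timestamp")
    speeds = []
    for i in range(n):
        # Central difference in the interior, one-sided difference at the ends.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        speeds.append(math.dist(pos[hi], pos[lo]) / (t[hi] - t[lo]))
    return speeds

# Example: a fingertip sliding at 3 cm/s in x and 4 cm/s in y (5 cm/s overall).
t = [k * 0.01 for k in range(11)]
pos = [(0.03 * tk, 0.04 * tk, 0.0) for tk in t]
print([round(s, 6) for s in exploration_speed(t, pos)])  # eleven values of 0.05
```

Finite differences amplify measurement noise, so a real tracking trace would usually be low-pass filtered before differentiation.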

Audio Signals

- Directional microphones: capture the sound of the finger-surface interaction
- Synchronized recording: aligned in time with the other modalities
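Streams such as audio, load, and fingertip tracking are usually sampled at different rates. Analysing them together therefore means putting them on a shared time base. One common approach is linear interpolation of a slower stream onto the timestamps of a reference stream. This is a minimal sketch that assumes every stream carries timestamps from a common clock. The function name and the example values are illustrative, not taken from the dataset.

```python
import bisect

def resample_linear(src_t, src_v, ref_t):
    """Linearly interpolate a scalar stream (src_t, src_v) onto ref_t.

    Assumes src_t is strictly increasing and uses the same clock as ref_t.
    Reference times outside the source range are clamped to the first or
    last source value instead of being extrapolated.
    """
    if len(src_t) != len(src_v) or not src_t:
        raise ValueError("source timestamps and values must be non-empty and equal length")
    out = []
    for t in ref_t:
        if t <= src_t[0]:
            out.append(src_v[0])
        elif t >= src_t[-1]:
            out.append(src_v[-1])
        else:
            # j is the first source index whose timestamp is strictly after t,
            # so src_t[j - 1] <= t < src_t[j].
            j = bisect.bisect_right(src_t, t)
            t0, t1 = src_t[j - 1], src_t[j]
            v0, v1 = src_v[j - 1], src_v[j]
            out.append(v0 + (t - t0) / (t1 - t0) * (v1 - v0))
    return out

# Example: an illustrative 10 Hz load signal resampled onto other timestamps.
load_t = [0.0, 0.1, 0.2]
load_v = [0.0, 1.0, 2.0]
print(resample_linear(load_t, load_v, [0.0, 0.05, 0.15, 0.25]))
```

The call returns values close to 0.0, 0.5, 1.5, and 2.0. The last value is clamped because 0.25 s lies past the end of the source stream. For high-rate signals such as audio, aligning the other way would call for proper band-limited resampling instead of linear interpolation. That case would mean downsampling audio onto a slower stream's clock.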

Visual Signals

- Stereoscopic images: high-resolution 4K cameras
- Multiple angles: comprehensive visual coverage

Data Collection

Participants

- 10 participants providing diverse interaction styles
- Controlled conditions for reproducibility

Surfaces

- 10 textured surfaces representing different materials
- Varied roughness, patterns, and materials

Scenarios

- Free exploration: natural, unconstrained interaction
- Controlled exploration: systematic parameter variation
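The collection covers 10 participants, 10 surfaces, and two exploration scenarios. Assume a full crossing, although the protocol and the number of repetitions per condition are not stated here. That gives 10 × 10 × 2 = 200 participant-surface-scenario conditions. Each participant brings a distinct interaction style, so a leave-one-participant-out split is a common way to check that a texture model generalizes to new people. The sketch below enumerates both. The identifiers (`P01`, `S01`, `"free"`, `"controlled"`) are hypothetical placeholders.

```python
from itertools import product

# Counts from the description above; the identifiers are hypothetical and a
# full crossing (every participant x every surface x both scenarios) is assumed.
PARTICIPANTS = [f"P{i:02d}" for i in range(1, 11)]
SURFACES = [f"S{i:02d}" for i in range(1, 11)]
SCENARIOS = ["free", "controlled"]

CONDITIONS = list(product(PARTICIPANTS, SURFACES, SCENARIOS))

def leave_one_participant_out(conditions):
    """Yield (held_out, train, test) splits, holding out one participant per fold."""
    for held_out in sorted({p for p, _, _ in conditions}):
        train = [c for c in conditions if c[0] != held_out]
        test = [c for c in conditions if c[0] == held_out]
        yield held_out, train, test

print(len(CONDITIONS))  # 200
for held_out, train, test in leave_one_participant_out(CONDITIONS):
    print(held_out, len(train), len(test))  # first line: P01 180 20
```

Under these assumptions each of the 10 folds trains on 180 conditions and tests on the 20 belonging to the held-out participant.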

Research Applications

Human Perception Studies

Understanding how humans integrate multiple senses to perceive texture:

- Which modalities are most important?
- How do they combine?
- What makes textures distinguishable?

Haptic Feedback Design

Informing the design of haptic systems:

- What signals need to be reproduced?
- Which are most salient?
- How accurate must reproduction be?

AI-Driven Texture Recognition

Training machine learning models:

- Our classifier achieved high accuracy across modality combinations
- Demonstrates potential for robotic texture recognition
- Enables human-machine interaction applications
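The classifier is evaluated across modality combinations. Suppose the modalities are grouped into the three signal families listed under What We Capture. That grouping is an assumption; the actual one may be finer, for example separating accelerometer and force channels. Under it there are 2^3 - 1 = 7 non-empty combinations to evaluate. This is a minimal sketch of enumerating them for an ablation. Here `evaluate` is a hypothetical stand-in for whatever training and scoring procedure is used.

```python
from itertools import combinations

# Assumed grouping into the three signal families described above.
MODALITIES = ("haptic", "audio", "visual")

def modality_subsets(modalities=MODALITIES):
    """Return every non-empty subset of modalities, single modalities first."""
    return [
        subset
        for k in range(1, len(modalities) + 1)
        for subset in combinations(modalities, k)
    ]

def ablation(evaluate, modalities=MODALITIES):
    """Score each subset with a caller-supplied evaluate(subset) -> float.

    evaluate is a hypothetical placeholder for training and testing a
    classifier restricted to the given modalities.
    """
    return {subset: evaluate(subset) for subset in modality_subsets(modalities)}

for subset in modality_subsets():
    print("+".join(subset))
```

The loop prints `haptic`, `audio`, `visual`, `haptic+audio`, `haptic+visual`, `audio+visual`, and `haptic+audio+visual`. Comparing each combination's score against the single-modality scores is one way to approach the perception question of which modalities matter most.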

The Bigger Picture

This dataset advances our understanding of tactile perception, knowledge that is essential for creating prosthetics and robotic systems that can truly feel.